Research Intern — Meta Reality Labs × Augmented Human Lab

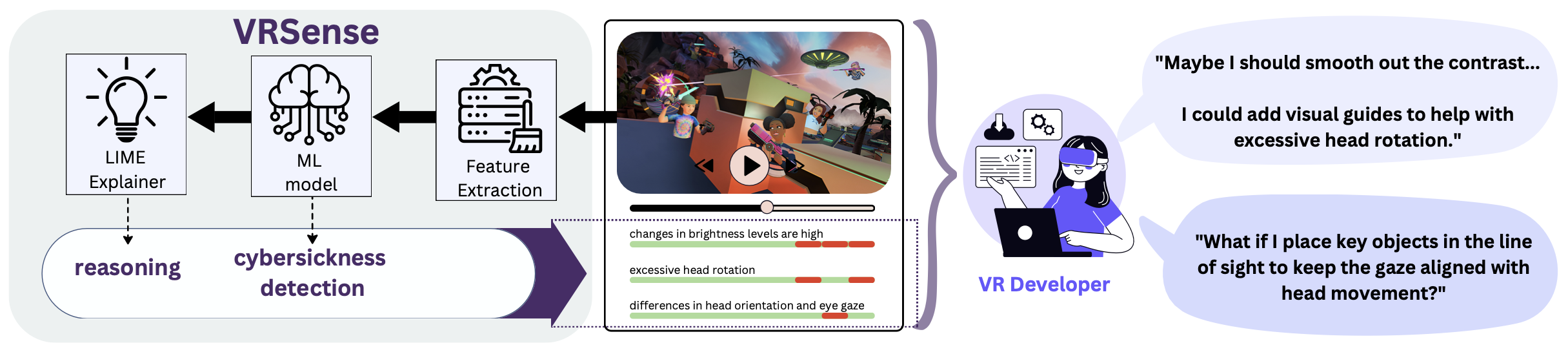

- Led the design and execution of a large-scale VR gameplay user study (N=150), investigating motion sickness during active VR interactions.

- Collected and synchronized multimodal, high-frequency data, including eye tracking, head motion, and physiological signals, in real-world XR settings.

- Developed machine learning models to predict discomfort and cybersickness onset in real time during gameplay.

- Built robust data pipelines for aligning heterogeneous sensor streams at scale.

- Translated model outputs into actionable insights for evaluating games on VR platforms.